Consider this: In 2022, IBM’s Global AI Adoption Index found that 35% of businesses were already using AI, with another 42% exploring it.

And this was before the release of cutting-edge AI apps like ChatGPT, Google Bard and Midjourney – all of which are now well within the reach of businesses worldwide, and boosting productivity like never before.

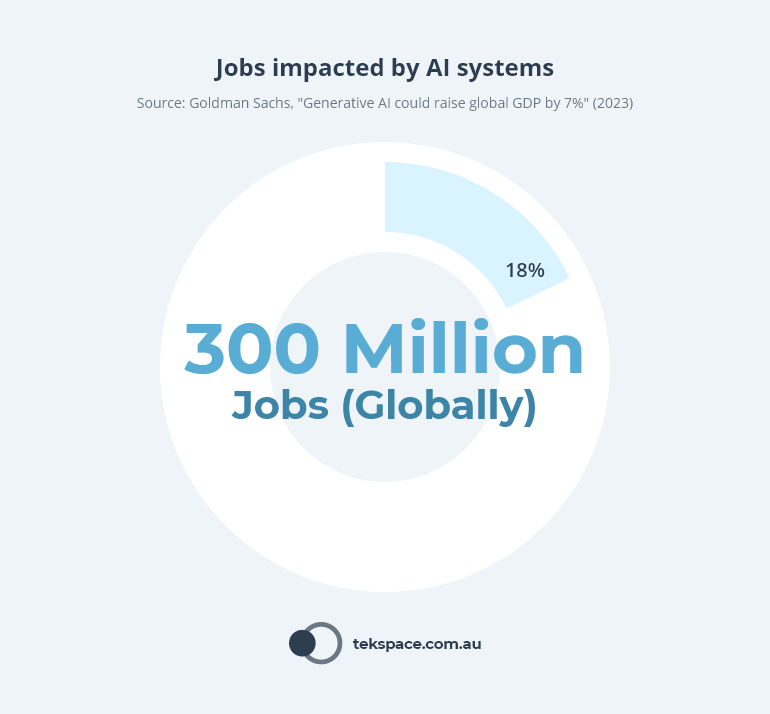

To put this into perspective, Goldman Sachs estimates that AI apps could reshape nearly 18% of all work globally – that’s around 300 million jobs.

However, it’s not all rosy. Powerful as these tools are, they can be a double-edged sword.

With these AI apps come substantial new data security risks.

From our observations at Tekspace, businesses have recently tackled these risks in one of two ways:

- Block everything.

- Allow everything (i.e. do nothing).

But finding the right solution to secure your data is rarely a matter of choosing between black and white. The challenge for IT and business leaders is to navigate these risks without stifling innovation or productivity.

This article isn’t about picking sides, but about giving you the practical steps to handle these emerging AI security risks.

But before we jump in, make sure you get a copy of our free ‘AI and Sensitive Data Policy’ template for governing the use of AI apps in your business.

Get your free AI Policy template

Govern the use of AI Apps in your organisation. This policy can be used right out of the box, or completely customised to your needs.

Tekspace will never send you spam or share your email address with a third-party.

Sweeping cyber security strategies are two sides of the same coin

The ‘block everything’ tactic might seem safe on the surface, but this approach can unexpectedly backfire.

When we outright deny access to useful tools, we’re encouraging our staff to find unofficial, alternative ways around these roadblocks.

This is how ‘shadow IT’ emerges.

What’s ‘shadow IT’? It’s the unsanctioned use of tech tools that fly under the IT department’s radar, and can unintentionally lead to even bigger security risks than a more relaxed policy might.

Moreover, as AI apps become more business critical, cutting off access to these revolutionary tools means missing out. You’re essentially denying your team – and therefore, your business – the opportunity to hone skills for effectively using these AI tools.

But what about the ‘allow everything’ approach?

While this strategy fosters innovation and skill-building, it does need careful supervision to rein in any associated security risks. So, what’s the answer to this dilemma? Let’s delve deeper.

Using AI Apps: How to Keep Your Data Secure

When it comes to managing AI security risks, it’s all about walking the tightrope. You want to give your team the freedom to use these cutting-edge tools, but without putting your precious data at risk. So, how do you find this sweet spot?

1. Employee Education on the Risks of Generative AI

Embracing AI calls for a better understanding of the risks, and that starts with employee awareness and education.

Put simply, your team’s expertise needs to level up in two key areas.

First, they must understand that the moment sensitive corporate or customer data is used with these tools, it has left the confines of your environment. In other words, using sensitive data with AI apps means exposing that data to the outside world. At minimum, this could be a risk to your intellectual property. But it could also mean breaching a confidentiality clause or local privacy laws.

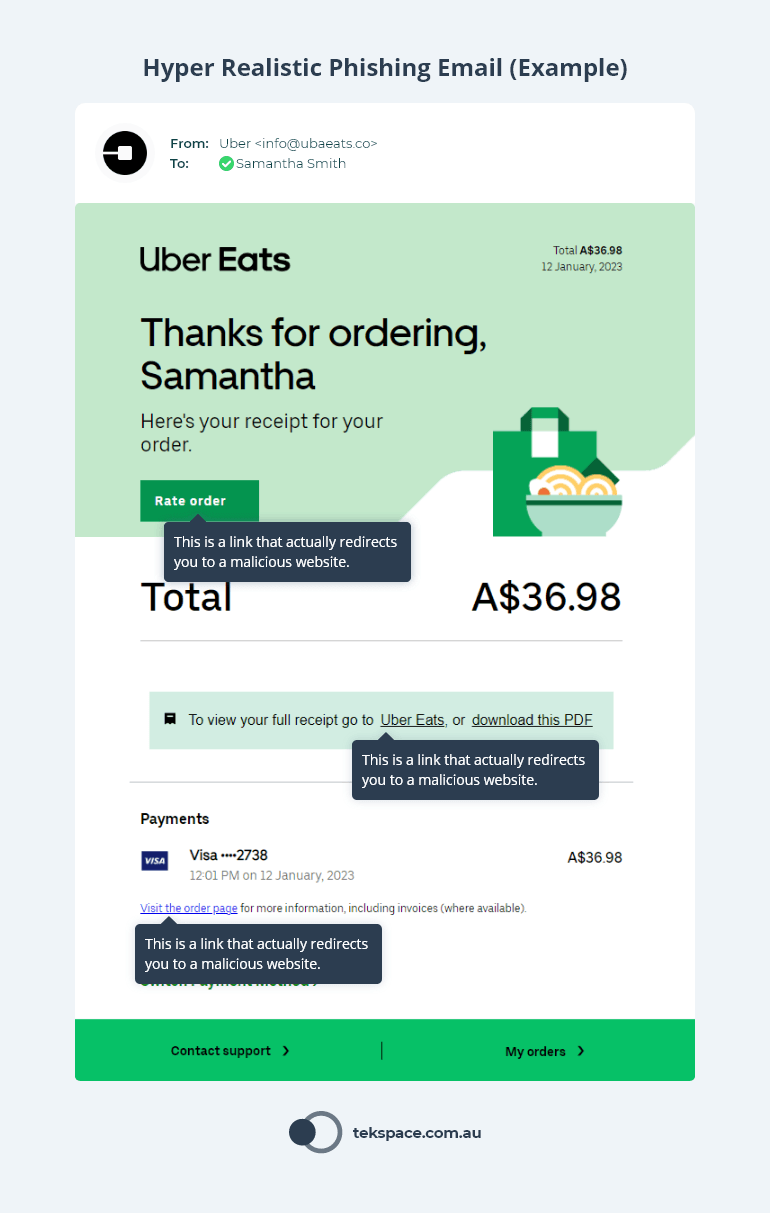

Second, let’s not forget these advanced tools aren’t solely in the hands of your employees; bad actors also have access.

This introduces a new wave of intricate threats, from ultra-realistic phishing and malware attacks to the advent of ‘Deep Fakes’.

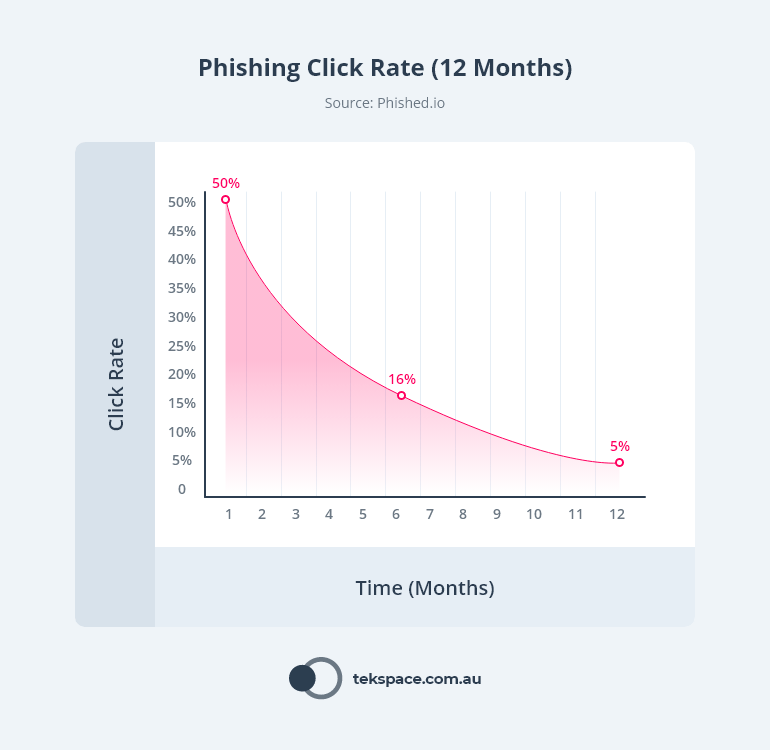

Leading security awareness solutions like Phished are continuously releasing new phishing simulations and training to keep your staff ahead of advanced threats, and to help them recognise and promptly report any suspicious emails or activity.

When we equip our staff with the right knowledge, they can transform from our most significant cyber security risk into our most valuable line of defence.

In fact, research from Phished shows that phishing click rates can fall by as much as 95% after 12 months of simulations and training. Want to learn more? Book a demo here.

2. AI and Sensitive Data Policy

An important strategy for minimising the risk of unintentional data exposure is implementing clear governance over the use of AI apps.

Company policies are a fantastic tool for clearly articulating (and enforcing) your organisation’s position on the use of AI.

At Tekspace, we call this an ‘AI and Sensitive Data Policy’, which should include all of the following:

- Policy Statement: Explain the intent of the document.

- Define ‘Sensitive Data’: Describe what ‘sensitive data’ means in the context of your organisation.

- AI App Usage Guidelines: Outline what staff can, and cannot do, with AI apps.

- Approved AI Apps: List the AI Apps that are approved in your organisation, within the boundaries of the rules set out in the policy.

- Policy Enforcement: Clearly state what’s on the line if the Policy is breached.
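As a rough illustration of how the policy elements above might translate into something checkable, here is a minimal Python sketch. The class name `AIDataPolicy`, the function `check_prompt`, the approved-app list, and the regular expressions (including the `CUST-` customer ID format) are all assumptions made for illustration. They are not part of the Tekspace template, and real sensitive-data detection would need far more than a few patterns.

```python
import re
from dataclasses import dataclass, field


@dataclass
class AIDataPolicy:
    """Hypothetical, simplified model of an 'AI and Sensitive Data Policy'."""

    # "Approved AI Apps": names are stored in lowercase
    approved_apps: set
    # "Define 'Sensitive Data'": label -> compiled pattern (illustrative only)
    sensitive_patterns: dict = field(default_factory=dict)

    def is_app_approved(self, app: str) -> bool:
        return app.lower() in self.approved_apps

    def find_sensitive(self, text: str) -> list:
        """Return the labels of every sensitive-data pattern found in text."""
        return sorted(
            label
            for label, pattern in self.sensitive_patterns.items()
            if pattern.search(text)
        )


def check_prompt(policy: AIDataPolicy, app: str, prompt: str):
    """'AI App Usage Guidelines': return (allowed, reasons) for one prompt."""
    reasons = []
    if not policy.is_app_approved(app):
        reasons.append(f"app not approved: {app}")
    reasons.extend(
        f"sensitive data: {label}" for label in policy.find_sensitive(prompt)
    )
    return (not reasons, reasons)


# Example policy with assumed values
policy = AIDataPolicy(
    approved_apps={"chatgpt"},
    sensitive_patterns={
        "email_address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
        "customer_id": re.compile(r"\bCUST-\d{6}\b"),  # made-up ID format
        "confidential_marker": re.compile(r"\bconfidential\b", re.IGNORECASE),
    },
)

if __name__ == "__main__":
    print(check_prompt(policy, "ChatGPT", "Write a tagline for our spring sale"))
    print(check_prompt(policy, "ChatGPT", "Summarise this CONFIDENTIAL memo"))
```

A check like this could only ever support the written policy, for example as a reminder shown to staff. It does not replace the policy, or the training that goes with it.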

To get started, you can download our template (see below), then follow these steps:

- Identify Stakeholders: Collaborate with all relevant parties in your organisation to define what data is considered sensitive.

- Draft the Policy: Using our template, clearly outline the restrictions on the use of sensitive data with AI tools. Detail the consequences of policy violation to underscore the importance of adherence.

- Communicate the Policy: Share the policy across all teams and make sure it is understood. Hold training if necessary. Clarity is paramount in data security.

Remember, a thoughtfully created policy serves as a strong barricade, safeguarding your sensitive data while you leverage the productivity benefits of generative AI apps.

Now, grab a copy of the template so you can get started!

Get your free AI Policy template


3. Role-Based Access (Zero Trust) to AI Apps

AI Apps are productivity powerhouses, but they aren’t necessary (or even useful) for all roles in your organisation.

And the more people with access to these tools, the bigger your cyber security risk.

This is why cyber security best practice suggests a ‘zero trust’ approach for access to corporate data and services. In simple terms, only staff who need resources (by way of their function) should have the ability to access them.

Imagine your marketing team for a moment.

They might see ChatGPT as an invaluable collaborator for generating engaging content. But contrast that with front-line staff who may have little use for such tools.

So how can you ensure that these AI apps can only be accessed by those who need them? Well, here is where the plot thickens.

You’d be forgiven if your mind immediately jumped to identity management services (like Microsoft Active Directory or Okta). But unlike enterprise software, most AI apps don’t yet offer the ability to connect with these services and manage user accounts across your organisation – limiting control over access based on role or group.

But let’s suppose these AI apps did offer these types of controls. Employees could easily circumvent them by creating their own user accounts using their personal email addresses (which your organisation has no control over).

Of course, you could set up some firewall rules to block access to these websites, but with our increasingly mobile workforce, this approach has its limitations.

And so the conundrum remains. How can you ensure that only employees who require these apps have access to them?

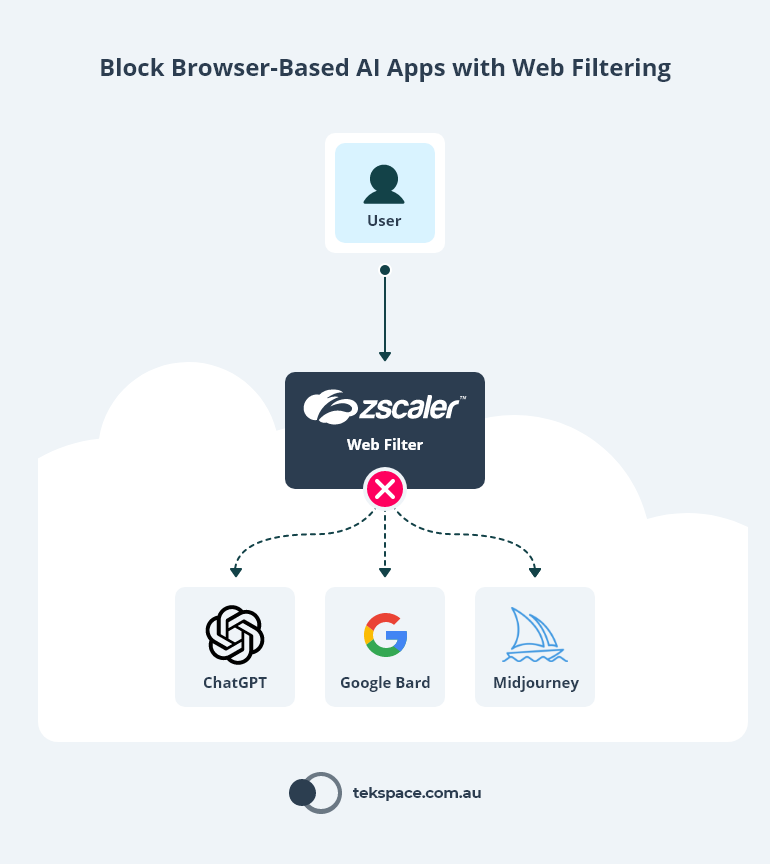

The answer lies in web filtering technology (such as Zscaler).

These tools give businesses the ability to centrally manage employee access to all web content, including browser-based AI apps like ChatGPT and Google Bard.

The moment unauthorised staff try to access their favourite AI app, web filtering will block them.
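The rule a web filter enforces here is essentially a default-deny allowlist per role. The Python sketch below shows that logic only. The role names, the domain lists, and the function `is_request_allowed` are hypothetical, and the sketch does not reflect how Zscaler or any other product is actually configured.

```python
from urllib.parse import urlparse

# Domains this rule treats as AI apps (illustrative list, not exhaustive)
AI_APP_DOMAINS = {"chat.openai.com", "bard.google.com", "www.midjourney.com"}

# Hypothetical mapping of job role -> AI app domains that role may reach
AI_APP_ALLOWLIST = {
    "marketing": {"chat.openai.com", "bard.google.com"},
    "engineering": {"chat.openai.com"},
}


def is_request_allowed(role: str, url: str) -> bool:
    """Default-deny check: a role reaches an AI app only if explicitly listed."""
    host = (urlparse(url).hostname or "").lower()
    if host not in AI_APP_DOMAINS:
        # Not an AI app, so this particular rule does not apply
        return True
    return host in AI_APP_ALLOWLIST.get(role, set())


if __name__ == "__main__":
    print(is_request_allowed("marketing", "https://chat.openai.com/"))
    print(is_request_allowed("front_line", "https://chat.openai.com/"))
```

Because the check is keyed on role rather than on individual user accounts, it applies the same way whether staff sign in to the AI app with a work email address or a personal one.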

4. Create an In-House AI App

Of all the strategies we’ve discussed, one stands out as a considerable investment: Creating your own in-house AI tool.

Sounds intense? Maybe, but it might just make perfect sense for your business.

If using tools like ChatGPT with sensitive data would truly boost productivity, then constructing an in-house AI tool could be a worthy venture.

From the safety of your own IT environment, staff can use AI Apps (and Large Language Models, like GPT) to explore and extract insights from your proprietary data.

And it’s all possible thanks to APIs from platforms like OpenAI, which do most of the AI-powered heavy-lifting for you.

Here’s a straightforward roadmap to implementing your own in-house AI tool:

- Evaluate Your Needs: Understand the specific needs of your organisation. What tasks do you envision your in-house AI tool performing?

- Consider the Costs: Weigh the potential benefits against the resources required for development and maintenance.

- Assemble a Team: Gather a group of skilled AI and data professionals. They’ll be the architects and builders of your tool. You could choose to outsource this work too.

- Secure Your Environment: The tool should operate within a secure, sandboxed environment, keeping your data safe.

- Test and Implement: Before the full roll-out, test the tool extensively. Make necessary adjustments, and you’re good to go.
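One possible shape for the "Secure Your Environment" step is a thin internal gateway that sits between staff and the model, keeps an audit trail, and masks obvious identifiers before anything is forwarded. The sketch below assumes this design, which is not something the roadmap prescribes. The model call is passed in as a plain function (`call_model`) so that no particular vendor API is assumed, and `fake_model` is a stand-in for demonstration only.

```python
import re
from typing import Callable, List

# Illustrative pattern only; a real deployment would cover many more data types
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact(text: str) -> str:
    """Mask email addresses before the text leaves the gateway."""
    return EMAIL.sub("[REDACTED_EMAIL]", text)


def ask_internal_assistant(
    prompt: str,
    call_model: Callable[[str], str],
    audit_log: List[str],
) -> str:
    """Redact the prompt, record what was sent, then forward it to the model."""
    safe_prompt = redact(prompt)
    audit_log.append(safe_prompt)  # the log stores only the redacted text
    return call_model(safe_prompt)


def fake_model(prompt: str) -> str:
    """Stand-in for a real model client, used here for demonstration."""
    return f"echo: {prompt}"


if __name__ == "__main__":
    log: List[str] = []
    print(ask_internal_assistant("Draft a reply to jane@example.com", fake_model, log))
    print(log)
```

Swapping `fake_model` for a real client would be the point where the choice of provider, and its data-handling terms, comes into play.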

In essence, creating an in-house AI tool is like crafting your own high-tech Swiss Army knife: customised to your needs and safe in your hands.
